Hello Readers!!

In this blog I’m going to talk about one of the most popular configuration management tools: Puppet.

Puppet: isn’t it a funny name for a piece of software? A puppet is a person, group, or country under the control of another, and the same idea is implemented in the software, where agents are controlled by a master. That’s how it got its name!!!

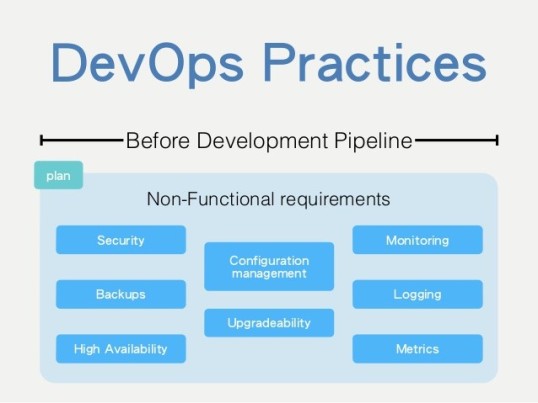

Today it is one of the most widely used configuration management tools. I hope everyone is familiar with configuration management; if not, don’t worry!!

I explained what configuration management is, and how it is structured, in my previous blog; please go through it for a better understanding.

For those who are new to configuration management, here is a short introduction.

What is Configuration Management?

Configuration management is the unique identification, controlled storage, change control, and status reporting of selected intermediate work products, product components, and products during the life of a system.

It is the detailed recording and updating of information that describes an enterprise’s hardware and software. Its advantage is that the entire collection of systems can be reviewed to make sure any changes made to one system do not adversely affect any of the other systems.
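To make the "review the whole collection before changing one system" idea concrete, here is a minimal Python sketch. It assumes a hypothetical inventory in which each record lists a system's hardware, software and the other systems it depends on; `SystemRecord` and `affected_by` are illustrative names, not part of Puppet or any real tool.

```python
from dataclasses import dataclass, field


@dataclass
class SystemRecord:
    """Hypothetical inventory entry describing one system's hardware and software."""
    name: str
    hardware: dict[str, str]
    software: dict[str, str]  # package name -> version
    depends_on: set[str] = field(default_factory=set)  # names of other systems


def affected_by(change_target: str, inventory: dict[str, SystemRecord]) -> set[str]:
    """Return every system that directly or transitively depends on change_target."""
    affected: set[str] = set()
    frontier = [change_target]
    while frontier:
        current = frontier.pop()
        for record in inventory.values():
            if current in record.depends_on and record.name not in affected:
                affected.add(record.name)
                frontier.append(record.name)
    return affected


# Illustrative inventory: web -> app -> db, and an independent monitoring host.
inventory = {
    r.name: r
    for r in [
        SystemRecord("db", {"cpu": "8 cores"}, {"postgresql": "15"}),
        SystemRecord("app", {"cpu": "4 cores"}, {"python": "3.12"}, {"db"}),
        SystemRecord("web", {"cpu": "2 cores"}, {"nginx": "1.24"}, {"app"}),
        SystemRecord("monitor", {"cpu": "2 cores"}, {"prometheus": "2.50"}),
    ]
}
```

With this record in place, `affected_by("db", inventory)` returns `{"app", "web"}`, so a planned database change can be reviewed against both dependent systems before it is made, while the monitoring host is unaffected.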

Many customers use Puppet as a configuration management tool in conservative, compliance-oriented industries such as banking and government. The nice thing about Puppet is that it grows and scales as the team and infrastructure grow and scale.

What is Puppet?

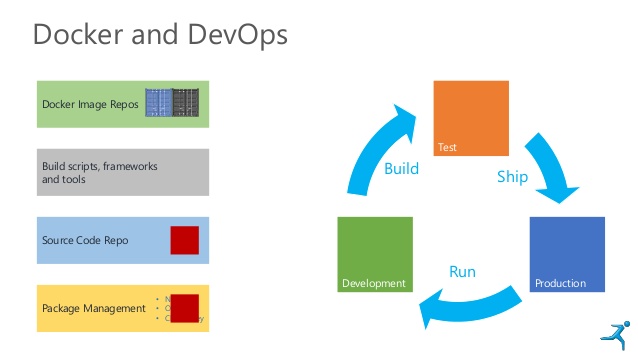

Puppet is an open-source IT automation tool developed by Puppet Labs. It is written in Ruby and consists of a declarative language for expressing system configuration, a client and a server for distributing that configuration, and a library for applying it. Puppet helps automate the deployment and scaling of applications, whether in the cloud or on premises.
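"Declarative" means you describe the state a system should be in, not the commands to get there. The following is a toy Python model of that idea, not Puppet's language or its Ruby library: the resource names are hypothetical, and assigning to a dictionary stands in for a real system change. In an actual Puppet manifest the first resource would be written as `package { 'ntp': ensure => installed }`.

```python
# Desired state, declared as data: what should be true, not how to make it true.
desired = {
    "package:ntp": {"ensure": "installed"},
    "file:/etc/motd": {"ensure": "file", "content": "Managed by Puppet\n"},
    "service:ntpd": {"ensure": "running"},
}


def converge(actual: dict, desired: dict) -> list[str]:
    """Bring `actual` in line with `desired` and return the names of resources changed."""
    changed = []
    for name, properties in desired.items():
        if actual.get(name) != properties:
            actual[name] = dict(properties)  # stand-in for installing/editing/starting
            changed.append(name)
    return changed


state = {"package:ntp": {"ensure": "installed"}}  # ntp is already installed
first = converge(state, desired)   # fixes only the two resources that differ
second = converge(state, desired)  # nothing left to do on a second run
```

The first run changes only `file:/etc/motd` and `service:ntpd`; the second run changes nothing. This idempotence is what makes it safe to apply the same declarative configuration again and again.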

The basic design goal of Puppet is to provide a powerful, expressive language backed by a capable library, so that you can write your own server automation in just a few lines of code. Its extensibility and open-source license let us add whatever functionality we need.

Why Puppet?

Puppet was developed to help the sysadmin community build and share mature tools, so that the same problem does not have to be solved over and over again. It is extremely powerful for deploying, configuring, managing and maintaining server machines.

Using Puppet speeds up a sysadmin’s work, because it keeps track of and handles all the details. It is also easy to download code written by other sysadmins, which helps get work done faster: most Puppet implementations use one or more modules already developed by others, and the community has developed and shared hundreds of them. Puppet’s functionality is organized as a stack of individual layers. Each layer is responsible for one specific aspect of the system, with tight control over how information passes between the layers.

How Does Puppet Work?

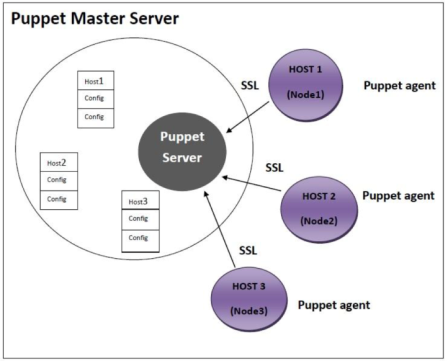

Puppet is generally used in a client/server architecture. The Puppet agent is a daemon that runs on every client server that needs to be configured, that is, on every server to be managed by Puppet. Each managed machine, known in Puppet as a node, runs the Puppet agent and has a server designated as its Puppet master.

So, what do the Puppet agent and the Puppet master do?

Puppet Master: This machine holds the entire configuration for the different hosts, and the master runs as a daemon on this server.

Puppet Agent: This is the daemon that runs on every server to be managed by Puppet. At a fixed time interval, the Puppet agent contacts the Puppet master server and requests its configuration.
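The important point is that the agent pulls its configuration; the master does not push it. Below is a minimal Python sketch of that pull loop. `fetch_config` and `apply_config` are hypothetical callables standing in for the real network request and apply step. The 30-minute default mirrors Puppet's `runinterval` setting, and `sleep` is injectable so the loop can be exercised without waiting.

```python
import time
from typing import Callable

DEFAULT_RUN_INTERVAL = 30 * 60  # seconds; Puppet's `runinterval` defaults to 30 minutes


def agent_loop(
    fetch_config: Callable[[], dict],
    apply_config: Callable[[dict], None],
    runs: int,
    interval: float = DEFAULT_RUN_INTERVAL,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Pull configuration from the master and apply it `runs` times, `interval` seconds apart."""
    for i in range(runs):
        config = fetch_config()  # the agent initiates the request (pull model)
        apply_config(config)
        if i < runs - 1:
            sleep(interval)  # wait until the next scheduled run


# Usage with stand-in functions and a fake sleep that just records the delays.
applied: list[dict] = []
delays: list[float] = []
agent_loop(
    fetch_config=lambda: {"package:ntp": "installed"},
    apply_config=applied.append,
    runs=3,
    sleep=delays.append,
)
```

Because every node initiates its own runs, the master only has to answer requests as they arrive rather than track and contact each node itself.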

Communication between the Puppet agent and the Puppet master takes place over a secure, encrypted SSL channel.
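Puppet authenticates agents with certificates signed by its own certificate authority, so both ends of the channel present certificates (mutual TLS). As a rough illustration of what "master requires an agent certificate" means, here is a Python sketch of a server-side TLS context built with the standard `ssl` module. It is not Puppet's implementation, and the certificate file names in the comments are hypothetical.

```python
import ssl


def make_master_tls_context() -> ssl.SSLContext:
    """Server-side TLS context that refuses clients without a valid certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # reject older protocol versions
    ctx.verify_mode = ssl.CERT_REQUIRED  # mutual TLS: agents must present a certificate
    # A real server would also load its own certificate/key and the CA that signed
    # the agents' certificates (paths below are hypothetical):
    # ctx.load_cert_chain("master.pem", "master.key")
    # ctx.load_verify_locations("ca.pem")
    return ctx


ctx = make_master_tls_context()
```

With `CERT_REQUIRED`, a handshake from an agent that cannot present a certificate signed by a trusted CA fails before any configuration is exchanged.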

The diagram above shows that the Puppet master server holds the configuration for Host 1 (Node 1), Host 2 (Node 2) and Host 3 (Node 3). The following steps take place whenever the Puppet agent on a node connects to the Puppet master server to fetch its configuration.

Step 1: Every time a client node makes a connection to the master, the master server examines the configuration to be applied to the node.

Step 2: The Puppet master server gathers all the resources and configuration to be applied to the node and compiles them into a catalog. This catalog is then sent to the Puppet agent on the node.

Step 3: The Puppet agent applies the configuration described in the catalog to the node, and then submits a report of the applied configuration back to the Puppet master server.
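The three steps can be sketched as a small simulation in Python. Everything here is hypothetical: `NODE_DEFINITIONS` stands in for the configuration stored on the master, a catalog is reduced to a dictionary of resource names and desired states, and the report just lists what changed. Real Puppet catalogs and reports carry far more detail.

```python
from dataclasses import dataclass

# Hypothetical master-side data: which resources each node should have.
NODE_DEFINITIONS = {
    "node1": {"package:nginx": "installed", "service:nginx": "running"},
    "node2": {"package:postgresql": "installed"},
}


@dataclass
class Report:
    node: str
    changed: list[str]
    unchanged: list[str]


def compile_catalog(node: str) -> dict[str, str]:
    """Steps 1-2 (master): look up the node's configuration and assemble a catalog."""
    return dict(NODE_DEFINITIONS.get(node, {}))


def apply_catalog(node: str, catalog: dict[str, str], state: dict[str, str]) -> Report:
    """Step 3 (agent): apply the catalog to the node and build a report for the master."""
    changed, unchanged = [], []
    for resource, desired in catalog.items():
        if state.get(resource) == desired:
            unchanged.append(resource)
        else:
            state[resource] = desired  # stand-in for the real change on the node
            changed.append(resource)
    return Report(node, changed, unchanged)


reports: list[Report] = []  # reports received by the master
node1_state = {"package:nginx": "installed"}  # nginx installed but not yet running

catalog = compile_catalog("node1")                             # master compiles
reports.append(apply_catalog("node1", catalog, node1_state))   # agent applies, reports
```

After one run, the report for `node1` lists `service:nginx` as changed and `package:nginx` as unchanged, which is the kind of information the master collects from every node.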

Conclusion

Puppet is both a simple and a complex system. It is made up of several moving parts that are only loosely wired together, and it provides a framework that can be applied to a wide range of configuration problems.

The name Puppet refers to two different things:

• The language in which code is written.

• The platform that manages infrastructure.

At its core, Puppet models infrastructure as code. Puppet code is easy to store and reuse: DevOps teams can use the same manifests to manage systems all the way from a laptop development environment to production, which can yield big improvements in deployment quality.

Thank You for reading!!!